AI in healthcare: what are the risks to the NHS?

At a time when there are more than seven million patients on NHS waiting lists in England and around 100,000 staff posts vacant, artificial intelligence could revolutionise healthcare by improving patient care and freeing up staff time.

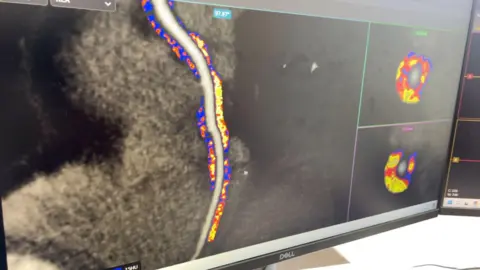

Its uses are varied: from detecting risk factors to help prevent conditions such as heart attacks, strokes and diabetes, to assisting doctors by analysing scans and X-rays to speed up diagnosis.

The technology is also boosting productivity, from automated voice assistants to routine administrative tasks such as scheduling appointments and recording doctors’ consultation notes.

‘Transformative’

Generative AI, a type of artificial intelligence that can produce content including text and images, will be transformative for patient outcomes, according to Sir John Bell, a senior government adviser on life sciences.

Sir John is president of the Oxford-based Ellison Institute of Technology, a research and development institute working on global challenges, including the use of AI in healthcare.

He says generative AI will improve the accuracy of diagnostic scans and help predict how patients will respond to different medical interventions, allowing more informed, personalised treatment decisions.

But he warned that researchers should not work in isolation; instead, innovation must be shared equally across the country so that no communities are left behind.

“To achieve these benefits, the NHS must unlock the enormous value currently trapped in data silos, to do good while protecting against harm,” says Sir John.

“Allowing AI access to all data in a safe and secure research environment will improve the representativeness, accuracy and equity of AI tools to benefit all sectors of society, reducing the financial and economic burden of running a world-leading National Health Service and creating a healthier nation.”

‘Mitigate risk’

AI opens up a world of possibilities, but it also brings risks and challenges, such as maintaining accuracy. Its results still need to be checked by trained staff.

The government is currently evaluating generative AI for use in the NHS. One concern is that it can sometimes “hallucinate”, producing content that is not backed by any reliable source.

Dr Caroline Green, from the Institute for Ethics in AI at the University of Oxford, is aware that some health and care workers use models such as ChatGPT to get advice.

“It’s important that people using these devices are properly trained to do so, meaning that they understand and know how to mitigate the risks posed by technical limitations… such as the potential for incorrect information to be provided,” she says.

She believes it is important to involve health and social care workers, patients and other organisations in the development of generative AI, and to assess its impact together with them in order to build trust.

Dr Green says some patients have chosen to de-register from their GP practices because of fears about how AI might be used in their healthcare and how their personal information might be shared.

“This means that these individuals will not be able to access essential health services in the future and will be deprived,” she says.

Follow BBC South on Facebook, X (Twitter) or Instagram. Send your story ideas to south.newsonline@bbc.co.uk or via WhatsApp on 0808 100 2240.